Introduction

Most large organisations have built a culture around how they build, operate and integrate the systems they own, and that culture draws boundaries between teams around what each is responsible for. Over the years I have seen a recurring organisational pattern in which integration systems such as the enterprise service bus, API gateway and other messaging appliances are managed by a central team, both for clear ownership of technology standards and for economies of scale in operation. This article covers API gateway deployment patterns for organisations with such central integration teams. Towards the end of the article, a reference table summarises the capabilities of each option.

This article doesn't cover the following in detail:

- Perimeter security measures implemented in load balancers

- Applications hosted on public cloud or container orchestration platforms (these mostly fall into the option 1 pattern)

The different patterns covered in the article:

1. API gateway as a lean pipe (option 1)

2. API gateway as a lean pipe with load balancing (option 2)

3. API gateway with service registry/service discovery capability (option 3)

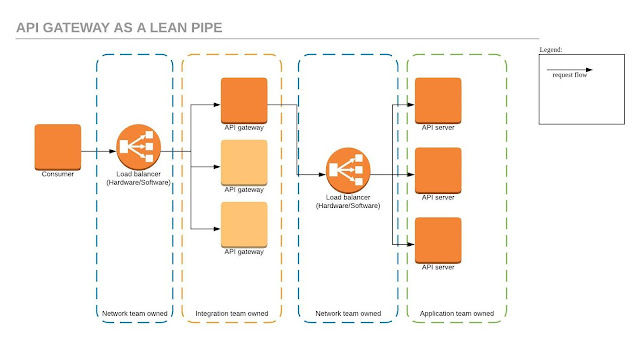

API gateway as a lean pipe (option 1)

This is the pattern teams commonly default to, mostly because it is a natural extension of their existing team boundaries. In this pattern we don't use the load balancing, health checking and circuit breaking capabilities provided by the API gateway.

Situations such as capacity increases, health-based routing, blue-green deployments and DR failover need to be managed via the load balancer config for each application.
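As a rough illustration of the lean pipe, the sketch below models a gateway whose routing config holds exactly one upstream address per API, namely the application's load balancer. The `Route` type, the `resolve_upstream` function and the hostnames are hypothetical names invented for this example, not the config model of any particular gateway product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """One gateway route: a path prefix mapped to a single upstream address."""
    path_prefix: str
    upstream: str  # hypothetical load balancer address owned by the application team


# Hypothetical route table: the gateway knows one address per API and nothing
# about the individual servers behind it.
ROUTES = [
    Route("/orders", "https://orders-lb.internal.example"),
    Route("/payments", "https://payments-lb.internal.example"),
]


def resolve_upstream(path: str, routes=ROUTES) -> str:
    """Return the upstream URL for a request path using longest-prefix match."""
    matches = [
        r for r in routes
        if path == r.path_prefix or path.startswith(r.path_prefix + "/")
    ]
    if not matches:
        raise LookupError(f"no route for {path}")
    best = max(matches, key=lambda r: len(r.path_prefix))
    return best.upstream + path
```

Under these assumptions, `resolve_upstream("/orders/42")` returns `https://orders-lb.internal.example/orders/42`. Adding servers or failing over to DR changes what sits behind that address, so the gateway config stays untouched.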

API gateway as a lean pipe with load balancing (option 2)

This is a common pattern for non-critical applications that are built with fixed capacity in mind. In this pattern we use the load balancing, health checking and circuit breaking capabilities provided by the API gateway. However, this leads to static registration of API servers at the API gateway, which makes capacity increases, blue-green deployments and DR failover operationally complex, because the API gateway config needs to be updated in each situation.
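To make the operational cost of static registration concrete, here is a minimal sketch of a gateway-side pool that round-robins over a fixed server list and skips servers marked unhealthy. `StaticUpstreamPool` and the server names are hypothetical. Failing fast when no server is healthy is only a crude stand-in for a real circuit breaker.

```python
import itertools


class StaticUpstreamPool:
    """Round-robin over a fixed server list that lives in gateway config."""

    def __init__(self, servers):
        self.servers = list(servers)      # fixed at config time
        self.healthy = set(self.servers)  # updated by health checks
        self._counter = itertools.count()

    def mark_down(self, server):
        """Record a failed health check for a server."""
        self.healthy.discard(server)

    def mark_up(self, server):
        """Record a passed health check; unknown servers are ignored."""
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        """Pick the next healthy server, or fail fast if none is healthy."""
        candidates = [s for s in self.servers if s in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy upstream available")
        return candidates[next(self._counter) % len(candidates)]
```

In this sketch `mark_up` ignores any server that is not already in the configured list, so a newly provisioned server receives no traffic until someone edits the gateway config and rebuilds the pool. That is the step the central team has to perform for every capacity change, blue-green switch or DR failover.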

API gateway with service registry/service discovery capability (option 3)

This pattern is very similar to option 2, but it uses a service registry and service discovery to minimise the config changes needed at the API gateway. This makes it easy for application teams to handle capacity increases, blue-green deployments and DR failover seamlessly.
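The sketch below shows one way the registry-based pattern could look, assuming an in-memory registry that application instances register with and that the gateway queries on every request. `ServiceRegistry` and `DiscoveringGateway` are hypothetical names. A real deployment would typically use a dedicated registry product or DNS, with health checks and caching that are omitted here.

```python
import itertools


class ServiceRegistry:
    """In-memory registry mapping a service name to its live instances."""

    def __init__(self):
        self._instances = {}

    def register(self, service, address):
        """Called by an application instance when it starts."""
        instances = self._instances.setdefault(service, [])
        if address not in instances:
            instances.append(address)

    def deregister(self, service, address):
        """Called when an instance shuts down or is drained."""
        instances = self._instances.get(service, [])
        if address in instances:
            instances.remove(address)

    def lookup(self, service):
        """Return a copy of the current instance list for a service."""
        return list(self._instances.get(service, []))


class DiscoveringGateway:
    """Gateway that resolves instances at request time instead of from config."""

    def __init__(self, registry):
        self.registry = registry
        self._counter = itertools.count()

    def next_server(self, service):
        instances = self.registry.lookup(service)
        if not instances:
            raise RuntimeError(f"no registered instance for {service}")
        return instances[next(self._counter) % len(instances)]
```

Here the gateway config only names the service. The application team scales out by starting instances that call `register`, and performs a blue-green switch by registering the new instances and deregistering the old ones, all without a gateway change.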

Summary

There is no one-size-fits-all solution for API gateway deployments. Each integration scenario needs to be evaluated carefully and designed so that the right capabilities of the gateway are used to deliver the expected outcome. The following table summarises the article.

| Criteria | Option 1 | Option 2 | Option 3 |
| --- | --- | --- | --- |
| Authentication management at the gateway | Yes | Yes | Yes |
| Rate limiting / throttling at the gateway | Yes | Yes | Yes |
| Header-based routing at the gateway | Yes | Yes | Yes |
| Circuit breaking at the gateway | No | Yes | Yes |
| Load balancing / health checking at the gateway | No | Yes | Yes |
| DNS/lookup-based routing at the gateway | No | No | Yes |
| Number of components a request passes through | 5 | 4 | 3 |
| Single points of failure | 2 | 1 | 0 |
| Capacity increase / failover logic in gateway config | No | Yes | No |

How does your organisation tackle API gateway deployment? Join the conversation and leave a comment.